Getting Started

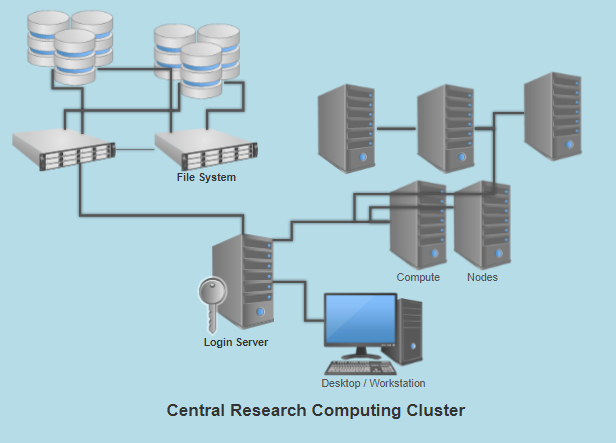

The CUHK Central Cluster consists of 60 compute nodes, 1.5PB of Lustre storage, 3 servers with GPU accelerators, a quad-CPU node with 3TB of memory, and an InfiniBand EDR network running at 100Gbps that connects all nodes. The Central Cluster is a subscription-based service that requires an application from the Principal Investigator (PI) of an ongoing research project.

- Overview

- 50 x compute nodes with dual Intel(R) Xeon(R) Gold 6130 CPU @ 2.10GHz and 96GB memory.

- 10 x compute nodes with dual Intel(R) Xeon(R) Gold 6130 CPU @ 2.10GHz and 192GB memory.

- One large-memory compute node with 4 x Intel(R) Xeon(R) Gold 6142 CPU @ 2.60GHz and 3TB memory.

- 7 x compute nodes with quad Intel(R) Xeon(R) Gold 6128 CPU @ 3.40GHz and 128GB memory.

- 3 x compute nodes with dual Intel(R) Xeon(R) E5-2690 v3 CPU @ 2.60GHz and 128GB memory.

- 4 x GPU nodes, each with three Tesla V100 GPUs (32GB memory).

- One GPU node with two Tesla P100 GPUs (16GB memory).

- A Lustre file system with a capacity of 1.5PB.

- All compute nodes and storage nodes are interconnected by an InfiniBand EDR network at 100Gbps.

- Account application

- Login to Central Cluster

- Job submission to the Central Cluster and job monitoring.

- Quick and simple tasks such as script and code editing.

- No computation, code compilation, interactive jobs, long-running processes, etc.

- Each user may run up to 30 simple, lightweight processes. If a user exceeds 30 processes, older processes will be terminated.

- Lightweight tasks for job management (such as job submission, job cancellation, job accounting information, and job state reporting) and file operations (such as file editing, copying, moving, and downloading with wget) may run for at most 14 days.

- The Central Cluster is only accessible from the CUHK campus network. To access it from outside the campus network, you must use the CUHK VPN.

- An SSH client is required to make the SSH connection. If you have no preferred SSH client, you can download PuTTY from the PuTTY site.

- Make an SSH connection to chpc-login01.itsc.cuhk.edu.hk.

- Program compilation.

- Script development and testing before submitting jobs for computation on the Central Cluster.

- Running simple test jobs.

- File transfer.

- Interactive programs.

- X-display (graphical) programs.

- No heavy computational jobs or tasks.

- One session per user only.

- Each user may run up to 50 processes. If a user exceeds 50 processes, older processes will be terminated.

- Each process can run for a maximum of 3 days.

- Access the sandbox by running "ssh sandbox" on the Login Node (chpc-login01.itsc.cuhk.edu.hk).

- Get your Program Run by Job Submission

- File Management

- Software

- Policies

- Start with a small job and gradually scale it up to the optimal size.

- Don't give your access credentials to anyone else.

- Don't run production jobs on the login node.

- Don't store any personal data on the system.

- Use a password of sufficient complexity.

- Getting Help

The Central Cluster is a collection of compute nodes and storage interconnected by a high-throughput, low-latency network. It allows researchers to run programs that cannot be run on their desktop or workstation computers.

All nodes of the system run CentOS 7, and Slurm is the workload manager of the Central Cluster. The major components of the system are listed under "Overview" above.

Before you can use the Central Cluster, you must request an account. All applications must be submitted by a contributing PI of the Central Cluster. Please send your account request, or any questions about this cluster, to Central Cluster Support.

After receiving your credentials for the Central Cluster, you can log in to the system using the Secure Shell (SSH) protocol. The entry point of the Central Cluster is its Login Node (chpc-login01.itsc.cuhk.edu.hk).

The functions and usage limits of the Login Node (chpc-login01) are listed under "Login to Central Cluster" above.

Follow the instructions to enter your username and password, then click "Open" to connect to the system.
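On Linux or macOS, the OpenSSH `ssh` command can be used instead of PuTTY. The Python sketch below only assembles that command line for a connection to the Login Node. `alice` is a hypothetical computing ID, and the helper names are illustrative rather than part of any cluster tooling.

```python
import shlex
import subprocess

LOGIN_NODE = "chpc-login01.itsc.cuhk.edu.hk"


def ssh_login_command(computing_id: str) -> list[str]:
    """Build the argument list for an OpenSSH connection to the Login Node."""
    return ["ssh", f"{computing_id}@{LOGIN_NODE}"]


def connect(computing_id: str) -> int:
    """Open an interactive SSH session and return ssh's exit status.

    Works only from the CUHK campus network or through the CUHK VPN.
    """
    return subprocess.run(ssh_login_command(computing_id)).returncode


if __name__ == "__main__":
    # Print the equivalent shell command rather than connecting.
    print(shlex.join(ssh_login_command("alice")))
```

Running the printed command (`ssh alice@chpc-login01.itsc.cuhk.edu.hk`) in a terminal prompts for your password, much as PuTTY does.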

For program compilation, testing, and debugging, that is, work that needs more computation than the Login Node allows but is not a production job, use the sandbox node.
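The sandbox is normally reached by logging in to the Login Node and running `ssh sandbox`. From a Linux or macOS machine, OpenSSH's `-J` (jump host) option can in principle do both hops in one command, because the name `sandbox` is then resolved on the Login Node. This only works if the Login Node permits SSH TCP forwarding, which this guide does not state, so treat the sketch below as an assumption to verify. `-X` requests X11 forwarding for the X-display programs allowed on the sandbox. `alice` is hypothetical.

```python
import shlex

LOGIN_NODE = "chpc-login01.itsc.cuhk.edu.hk"
SANDBOX_HOST = "sandbox"  # host name as used from the Login Node


def ssh_sandbox_command(computing_id: str, x11: bool = True) -> list[str]:
    """Build an OpenSSH command that reaches the sandbox through the Login Node.

    -J routes the connection via the Login Node (a "jump host"). This assumes
    the Login Node allows TCP forwarding; if it does not, log in to the Login
    Node first and run `ssh sandbox` there instead.
    """
    cmd = ["ssh", "-J", f"{computing_id}@{LOGIN_NODE}"]
    if x11:
        # X11 forwarding for X-display programs (needs a local X server).
        cmd.append("-X")
    cmd.append(f"{computing_id}@{SANDBOX_HOST}")
    return cmd


if __name__ == "__main__":
    print(shlex.join(ssh_sandbox_command("alice")))
```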

The functions and usage limits of the sandbox node are listed under "Login to Central Cluster" above.

The resources of the Central Cluster are managed by the Slurm workload manager. With an effective workload manager, system resources such as CPU and memory are used efficiently, and your jobs are executed in a fair, prioritized order as soon as suitable resources are available.
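As an illustration of handing work to Slurm, the Python sketch below writes a minimal batch script and submits it with `sbatch`. The `#SBATCH` options are standard Slurm options. However, the job name, resource values, and program (`./my_program`) are hypothetical placeholders. Partition names and site limits are not assumed here; check the Slurm User Guide for those. The parser relies on the `Submitted batch job <id>` line that `sbatch` prints on success.

```python
import re
import subprocess
from pathlib import Path


def batch_script(job_name: str, ntasks: int, mem: str, time_limit: str, command: str) -> str:
    """Return the text of a minimal Slurm batch script."""
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --ntasks={ntasks}",
        f"#SBATCH --mem={mem}",          # memory per node, e.g. "4G"
        f"#SBATCH --time={time_limit}",  # wall-clock limit, e.g. "01:00:00"
        "#SBATCH --output=%x-%j.out",     # %x = job name, %j = job ID
        "",
        command,
    ]
    return "\n".join(lines) + "\n"


def parse_job_id(sbatch_stdout: str) -> int:
    """Extract the job ID from sbatch's 'Submitted batch job <id>' message."""
    match = re.search(r"Submitted batch job (\d+)", sbatch_stdout)
    if match is None:
        raise ValueError(f"unexpected sbatch output: {sbatch_stdout!r}")
    return int(match.group(1))


def submit(script_path: Path) -> int:
    """Submit a batch script with sbatch and return its job ID."""
    result = subprocess.run(["sbatch", str(script_path)],
                            capture_output=True, text=True, check=True)
    return parse_job_id(result.stdout)


if __name__ == "__main__":
    print(batch_script("test-run", ntasks=1, mem="4G",
                       time_limit="01:00:00", command="./my_program"))
```

On the Login Node, you would save the generated text to a file such as `test-run.sh` and call `submit(Path("test-run.sh"))`. The small resource request follows the policy of starting with a small job and scaling up.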

For more details about job submission, status checks, and job deletion, please refer to our Slurm User Guide or the official Slurm website.
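Status checks and job deletion use the standard Slurm commands `squeue`, `sacct`, and `scancel`. The sketch below wraps them from Python. It assumes the code runs on the Login Node, where these commands are on `PATH`. The `--format` field list is one reasonable choice, not a site requirement.

```python
import subprocess


def squeue_command(user: str) -> list[str]:
    """List the user's pending and running jobs."""
    return ["squeue", "-u", user]


def sacct_command(job_id: int) -> list[str]:
    """Show accounting information (state, elapsed time, exit code) for one job."""
    return ["sacct", "-j", str(job_id), "--format=JobID,JobName,State,Elapsed,ExitCode"]


def scancel_command(job_id: int) -> list[str]:
    """Cancel (delete) a pending or running job."""
    return ["scancel", str(job_id)]


def run(cmd: list[str]) -> str:
    """Run a Slurm command and return its standard output."""
    return subprocess.run(cmd, capture_output=True, text=True, check=True).stdout
```

For example, `print(run(squeue_command("alice")))` would show the jobs of the hypothetical user `alice`.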

The Central Cluster provides three places to store your files: the home directory /users/your_computing_id with a 20GB quota, a project directory /project/your_PI_group for permanent files, and a scratch directory /scratch/your_PI_group for working files. Both the project and scratch directories are under a quota shared among members of the same PI group.
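The sketch below maps a user to the three storage locations above. It also estimates how much of the 20GB home quota a directory tree uses by summing file sizes. `alice` and `pi_chan` are hypothetical names. Treating 20GB as binary gigabytes is an assumption. Summed apparent sizes only approximate what the quota system counts, so the authoritative figure comes from the file system's own quota tools.

```python
import os
from pathlib import Path

HOME_QUOTA_BYTES = 20 * 1024**3  # 20GB home quota; binary gigabytes assumed


def storage_paths(computing_id: str, pi_group: str) -> dict[str, Path]:
    """Return the three storage locations described in this guide."""
    return {
        "home": Path("/users") / computing_id,   # personal files, 20GB quota
        "project": Path("/project") / pi_group,  # permanent files, shared group quota
        "scratch": Path("/scratch") / pi_group,  # working files, purged automatically
    }


def apparent_usage_bytes(root: Path) -> int:
    """Sum the sizes of regular files under root (symlinks are not followed)."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if not os.path.islink(path):
                total += os.path.getsize(path)
    return total


def home_quota_fraction(home: Path) -> float:
    """Approximate fraction of the 20GB home quota in use."""
    return apparent_usage_bytes(home) / HOME_QUOTA_BYTES
```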

The scratch directory is for working files. Files that have not been accessed for 4 weeks are deleted automatically. In addition, when overall scratch usage reaches 85%, files are deleted automatically in reverse chronological order.
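To see which of your scratch files are at risk under the 4-week rule, you can compare each file's last-access time with the current time. The sketch below lists files whose `st_atime` is more than 28 days old, oldest first. It is only an approximation of the system purge: it is not the purge mechanism itself, and file systems may update access times lazily. Treat its output as a warning list. A path such as `/scratch/pi_chan` would be a hypothetical example.

```python
import os
import time
from pathlib import Path
from typing import Optional

PURGE_AGE_SECONDS = 28 * 24 * 3600  # "not accessed for 4 weeks"


def purge_candidates(root: Path, now: Optional[float] = None) -> list[Path]:
    """Return files under root last accessed more than 4 weeks ago, oldest first."""
    now = time.time() if now is None else now
    stale: list[tuple[float, Path]] = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = Path(dirpath) / name
            atime = path.lstat().st_atime  # stat does not itself update atime
            if now - atime > PURGE_AGE_SECONDS:
                stale.append((atime, path))
    stale.sort()  # ascending access time, so the oldest files come first
    return [path for _atime, path in stale]
```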

If you need to transfer data and program files to the Central Cluster, you can use the secure file transfer program WinSCP.
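WinSCP is a Windows program. On Linux or macOS, the OpenSSH `scp` command copies files over SSH in the same way. The sketch below builds such a command. It assumes transfers go through the Login Node host, which this guide does not state explicitly. The local folder, computing ID, and destination directory are hypothetical.

```python
import shlex
from pathlib import PurePosixPath

LOGIN_NODE = "chpc-login01.itsc.cuhk.edu.hk"


def scp_upload_command(local_path: str, computing_id: str, remote_dir: PurePosixPath) -> list[str]:
    """Build an scp command that copies a local file or directory to the cluster.

    -r copies directories recursively; -p preserves modification times and modes.
    """
    destination = f"{computing_id}@{LOGIN_NODE}:{remote_dir}"
    return ["scp", "-r", "-p", local_path, destination]


if __name__ == "__main__":
    cmd = scp_upload_command("results/", "alice", PurePosixPath("/project/pi_chan/data"))
    print(shlex.join(cmd))
```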

Standard software packages are installed on the Central Cluster. If you need software that is not yet available on the system, please let us know and we will do our best to make it available to you.

If the required software incurs a cost, the PI is responsible for settling it. Installed software is located under /opt/share/ and can be loaded with the module command.
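Loading a package is done in your shell with the module command (for example, `module avail` to list modules and `module load <name>` to load one). To see what has been installed, you can also simply list /opt/share/. The sketch below does that from Python. It assumes each top-level directory corresponds to one package, which this guide does not confirm. The root is a parameter so the function can be pointed elsewhere.

```python
from pathlib import Path

SOFTWARE_ROOT = Path("/opt/share")


def installed_software(root: Path = SOFTWARE_ROOT) -> list[str]:
    """Return the sorted names of top-level directories under the software root."""
    if not root.is_dir():
        return []
    return sorted(entry.name for entry in root.iterdir() if entry.is_dir())


if __name__ == "__main__":
    for name in installed_software():
        print(name)
```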

For more details about the software installed on the Central Cluster, please refer to the Software page.

We want you to make the most of the Central Cluster. However, some basic rules apply to keep the system secure, robust, and stable, so please follow the rules listed under "Policies" above.

Whenever you have questions or get stuck using the system, please don't hesitate to contact us at centralcluster@cuhk.edu.hk.